Download the library from GitHub: KinectPV2

Current Version 0.7.5 (August 15th 2015)

Library examples

- TestImages, Test all Frames/Images for the Kinect.

- SkeletonMaskDepth, Skeleton positions are mapped to match the depth and body index frames.

- SkeletonColor, Skeleton is mapped to match the color frame.

- Skeleton3d, 3d Skeleton example needs love.

- SimpleFaceTracking, simple face tracking with mood detection.

- PointCloudOGL, Point cloud depth render using openGL and shaders.

- PointCloudDepth, point cloud in a single 2d Image and threshold example.

- PointCloudColor, Point cloud in color, using openGL and shaders.

- MaskTest, Body Index test, and body index with depth.

- Mask_findUsers, find the number of users based on body index data.

- MapDepthToColor, depth to color mapping, depth frame is aligned with color frame.

- HDFaceVertex, Face vertices are matched to the HD color frame.

- HDColor, 1920 x 1080 RGB frame.

- DepthTest, Depth test with raw depth data.

- CoordinateMapperRGBDepth, example broken, check 0.7.2 version.

- RecordPointCloud, simple OBJ recording of the point cloud positions.

- openCV Examples

- Simple live capture

- Find user contours with depth frame or with bodyIndex frame

Code!!!

To get started with the library, import the KinectPV2 library and initialize the class.

import KinectPV2.*;

KinectPV2 kinect;

void setup() {

size(900, 768);

kinect = new KinectPV2(this);

kinect.enableColorImg(true);

kinect.enableSkeleton(true);

kinect.enableInfraredImg(true);

kinect.init();

}

To activate features of the Kinect for Windows v2 (K4W2), call the corresponding enable methods before kinect.init().

void enableColorImg(boolean toggle);

void enableDepthImg(boolean toggle);

void enableInfraredImg(boolean toggle);

void enableBodyTrackImg(boolean toggle);

void enableDepthMaskImg(boolean toggle);

void enableLongExposureInfrared(boolean toggle);

void enableSkeleton(boolean toggle);

void enableSkeletonColorMap(boolean toggle);

void enableSkeleton3dMap(boolean toggle);

void enableSkeletonDepthMap(boolean toggle);

void enableFaceDetection(boolean toggle);

void enableHDFaceDetection(boolean toggle);

void enablePointCloud(boolean toggle);

void enableCoordinateMapperRGBDepth(boolean toggle);

Obtain the frames from the Kinect device as Processing PImage objects.

PImage getColorImage();

PImage getDepthImage();

PImage getInfraredImage();

PImage getBodyTrackImage();

PImage getLongExposureInfrared();

Skeleton [] getSkeleton();

Raw Data

To access the raw data of an image, activate the corresponding raw stream in setup().

void activateRawColor(boolean toggle);

void activateRawDepth(boolean toggle);

void activateRawDepthMaskImg(boolean toggle);

void activateRawInfrared(boolean toggle);

void activateRawBodyTrack(boolean toggle);

void activateRawLongExposure(boolean toggle);

Obtain the raw data.

int [] getRawDepth();

int [] getRawColor();

int [] getRawInfrared();

int [] getRawBodyTrack();

int [] getRawLongExposure();

int [] getRawPointCloudDepth(); // as int [], as opposed to a FloatBuffer

Skeleton [] skeleton = kinect.getSkeleton();

KJoint [] joints = skeleton[i].getJoints(); // joints of skeleton i

Color Image example

A simple example of how to obtain the 1920 x 1080 color image from the Kinect.

import KinectPV2.*;

KinectPV2 kinect;

void setup() {

size(1920, 1080);

kinect = new KinectPV2(this);

kinect.enableColorImg(true);

kinect.init();

}

void draw() {

background(0);

image(kinect.getColorImage(), 0, 0);

}

Full HD Color (RGB) example

Depth, infrared, mask example

The depth, infrared and mask (body tracking) frames are captured at a single resolution of 512 x 424 pixels, which is higher than that of the previous Kinect. To obtain them, activate them in setup() and then initialize the KinectPV2 class.

import KinectPV2.*;

KinectPV2 kinect;

void setup() {

size(512*3, 424);

kinect = new KinectPV2(this);

kinect.enableDepthImg(true);

kinect.enableInfraredImg(true);

kinect.enableBodyTrackImg(true);

kinect.init();

}

void draw() {

background(0);

image(kinect.getDepthImage(), 0, 0);

image(kinect.getBodyTrackImage(), 512, 0);

image(kinect.getInfraredImage(), 512*2, 0);

}

- Depth, infrared and Color example

- Mask example

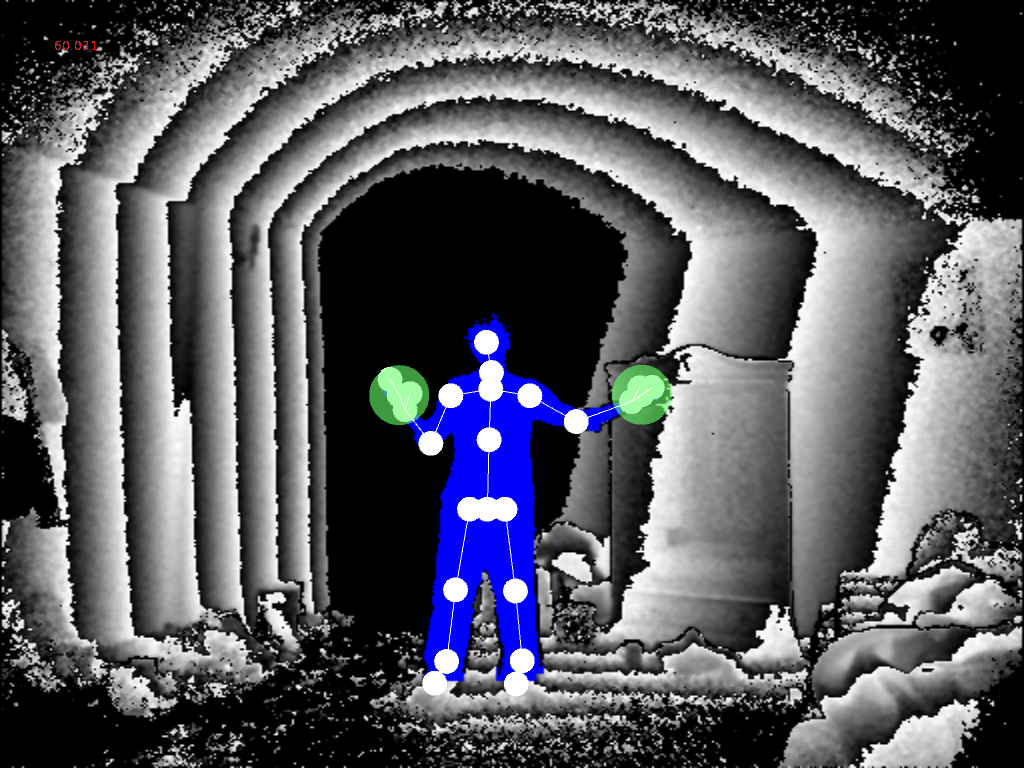

Skeleton

Access the skeleton positions from the Kinect. Skeleton detection supports up to 6 users with 25 joints each, and three hand states: open, closed and lasso.

Depending on your application, you can activate either the color map skeleton or the depth map skeleton. This maps the skeleton to the RGB image or to the depth image, so each skeleton position corresponds to a position in that image.

import KinectPV2.*;

KinectPV2 kinect;

void setup() {

size(1024, 768);

kinect = new KinectPV2(this);

kinect.enableDepthMaskImg(true);

kinect.enableSkeletonDepthMap(true);

kinect.init();

}

void draw() {

background(0);

image(kinect.getDepthMaskImage(), 0, 0);

//get the skeletons as an ArrayList of KSkeletons, mapped to the depth frame

ArrayList<KSkeleton> skeletonArray = kinect.getSkeletonDepthMap();

//alternatively, get the skeletons as an ArrayList mapped to the color frame

//ArrayList<KSkeleton> skeletonArray = kinect.getSkeletonColorMap();

//individual joints

for (int i = 0; i < skeletonArray.size(); i++) {

KSkeleton skeleton = (KSkeleton) skeletonArray.get(i);

KJoint[] joints = skeleton.getJoints();

color col = skeleton.getIndexColor();

fill(col);

stroke(col);

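//drawBody() and drawHandState() are not library calls; they are assumed to be helper functions defined elsewhere in the sketch

drawBody(joints);

drawHandState(joints[KinectPV2.JointType_HandRight]);

drawHandState(joints[KinectPV2.JointType_HandLeft]);

}

}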

When doing this kind of mapping, the skeleton only provides two coordinates (x, y). If you need the z coordinate, it is possible to obtain it from the joint's pixel position in the depth image or the color image. Another solution is to use the 3D skeleton. A third option is to compare the distance between x and y positions to produce a changing depth value.

- SkeletonMaskDepth for Skeleton Depth Map

- SkeletonColor for Skeleton Color Map

- Skeleton3d for 3D skeleton with orientation
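The depth-image lookup mentioned above can be sketched in plain Java. This is only an illustration under stated assumptions: the raw depth data is taken to be a row-major int array of 512 x 424 values, and the class name DepthLookup and method depthAt are hypothetical helpers, not part of KinectPV2.

```java
// Hypothetical helper (not part of KinectPV2): reads the raw depth value
// under a joint that has already been mapped to the depth frame.
final class DepthLookup {
    static final int DEPTH_WIDTH = 512;   // depth frame width stated in this document
    static final int DEPTH_HEIGHT = 424;  // depth frame height stated in this document

    /**
     * Returns the raw depth value at the pixel nearest to (jointX, jointY),
     * or -1 if the array has an unexpected size or the joint lies outside the frame.
     * Assumes the array is stored row by row (row-major order).
     */
    static int depthAt(int[] rawDepth, float jointX, float jointY) {
        if (rawDepth == null || rawDepth.length != DEPTH_WIDTH * DEPTH_HEIGHT) {
            return -1;
        }
        int x = Math.round(jointX);
        int y = Math.round(jointY);
        if (x < 0 || x >= DEPTH_WIDTH || y < 0 || y >= DEPTH_HEIGHT) {
            return -1;
        }
        return rawDepth[y * DEPTH_WIDTH + x];
    }
}
```

In a sketch, the array would come from the raw depth getter described in the Raw Data section, and the coordinates from a joint of a depth-mapped skeleton. The -1 return value lets the caller skip joints that fall outside the frame.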

Face Detection

The Kinect is capable of face tracking with mood detection for up to 6 users. The face point information is mapped to the color frame of the Kinect. It is also possible to obtain information about the state of the user, such as happy, unhappy, turning left, turning right, eyes closed and many more.

kinect.enableColorImg(true);

kinect.enableFaceDetection(true);

void draw() {

background(0);

image(kinect.getColorImage(), 0, 0);

//get the face data as an ArrayList

ArrayList<FaceData> faceData = kinect.getFaceData();

for (int i = 0; i < faceData.size(); i++) {

FaceData faceD = faceData.get(i);

if (faceD.isFaceTracked()) {

//obtain the face data from the color frame

PVector [] facePointsColor = faceD.getFacePointsColorMap();

KRectangle rectFace = faceD.getBoundingRectColor();

FaceFeatures [] faceFeatures = faceD.getFaceFeatures();

//get the color of the user

int col = faceD.getIndexColor();

fill(col);

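//display the face features and states

//(nosePos and getStateTypeAsString() are not defined in this excerpt; they are assumed to come from the full sketch)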

for (int j = 0; j < 8; j++) {

int st = faceFeatures[j].getState();

int type = faceFeatures[j].getFeatureType();

String str = getStateTypeAsString(st, type);

fill(255);

text(str, nosePos.x + 150, nosePos.y - 70 + j*25);

}

//draw a rectangle around the face

stroke(255, 0, 0);

noFill();

rect(rectFace.getX(), rectFace.getY(), rectFace.getWidth(), rectFace.getHeight());

}

}

}

- SimpleFaceTracking example

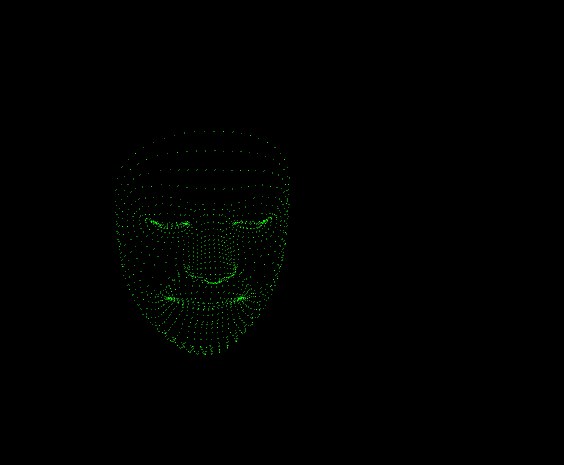

HDFace Vertex

The library can access the HDFace detection information of 6 users. With this detection it is possible to obtain up to 1347 2D vertex points of the face. The vertex positions are mapped to the RGB frame.

Enable the HDFace detection.

kinect = new KinectPV2(this);

kinect.enableColorImg(true); //needed because draw() displays the color image

kinect.enableHDFaceDetection(true);

kinect.init();

Draw the HDFace vertices.

void draw() {

// Draw the color Image

image(kinect.getColorImage(), 0, 0);

//Obtain the Vertex Face Points

// 1347 Vertex Points for each user.

ArrayList<HDFaceData> hdFaceData = kinect.getHDFaceVertex();

for (int j = 0; j < hdFaceData.size(); j++) {

//obtain the HDFace object with all the vertex data

HDFaceData HDfaceData = (HDFaceData)hdFaceData.get(j);

if (HDfaceData.isTracked()) {

//draw the vertex points

stroke(0, 255, 0);

beginShape(POINTS);

for (int i = 0; i < KinectPV2.HDFaceVertexCount; i++) {

float x = HDfaceData.getX(i);

float y = HDfaceData.getY(i);

vertex(x, y);

}

endShape();

}

}

}

HDFace Detection example.

Point Cloud to depth map

To render the point cloud (depth map) at 60 fps, some OpenGL calls are needed. Most of the work is done with OpenGL vertex buffers. First, obtain the float buffer with all depth positions (x, y, z) mapped to camera space. The float buffer is basically a big array of floats. The advantage of the FloatBuffer class is that it can be sent directly to an OpenGL vertex buffer, so the GPU does all the work of rendering the float buffer into the scene.

FloatBuffer pointCloudBuffer = kinect.getPointCloudDepthPos();

After creating the FloatBuffer with the point cloud positions, just draw the point cloud buffer.

//data size

int vertData = kinect.WIDTHDepth * kinect.HEIGHTDepth;

pgl = beginPGL();

sh.bind();

vertLoc = pgl.getAttribLocation(sh.glProgram, "vertex");

pgl.enableVertexAttribArray(vertLoc);

pgl.vertexAttribPointer(vertLoc, 3, PGL.FLOAT, false, 0, pointCloudBuffer);

pgl.drawArrays(PGL.POINTS, 0, vertData);

pgl.disableVertexAttribArray(vertLoc);

sh.unbind();

endPGL();

Complete code Point Cloud
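To make the float buffer layout more concrete, here is a small plain-Java sketch that reads a single point back on the CPU. It assumes the buffer is tightly packed as x, y, z triples, which matches the vertexAttribPointer call above (3 floats per vertex, stride 0). The class PointCloudReader and its methods are hypothetical helpers, not part of KinectPV2.

```java
import java.nio.FloatBuffer;

// Hypothetical helper (not part of KinectPV2): reads points from a
// FloatBuffer that stores positions as consecutive x, y, z floats.
final class PointCloudReader {
    static final int FLOATS_PER_POINT = 3; // x, y, z

    /** Number of complete points stored in the buffer. */
    static int pointCount(FloatBuffer cloud) {
        return cloud.limit() / FLOATS_PER_POINT;
    }

    /**
     * Returns {x, y, z} of the point at the given index.
     * Uses absolute get(int), so the buffer position is left untouched
     * and the same buffer can still be handed to OpenGL afterwards.
     */
    static float[] pointAt(FloatBuffer cloud, int index) {
        int base = index * FLOATS_PER_POINT;
        return new float[] {
            cloud.get(base), cloud.get(base + 1), cloud.get(base + 2)
        };
    }
}
```

Reading points this way is useful for inspection or for measuring distances, but for rendering it is still better to pass the whole buffer to the GPU as shown above.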

Point Cloud Threshold

You can use the point cloud data to extract distance information, such as the distance between objects or the actual distance from the Kinect to any user.

import KinectPV2.*;

KinectPV2 kinect;

//Distance Threshold

float maxD = 4.0f; //meters

float minD = 1.0f;

void setup() {

size(512*2, 424, P3D);

kinect = new KinectPV2(this);

//Enable point cloud

kinect.enableDepthImg(true);

kinect.enablePointCloud(true);

kinect.init();

}

void draw() {

background(0);

image(kinect.getDepthImage(), 0, 0);

/* Get the point cloud as a PImage

* Each pixel of the PointCloudDepthImage corresponds to the Z value

* (distance) of the point cloud; the values of

* the point cloud are mapped from (0 - 4500) mm to gray color (0 - 255)

*/

image(kinect.getPointCloudDepthImage(), 512, 0);

//raw data int values from [0 - 4500]

int [] rawData = kinect.getRawDepthData();

//Threshold of the point cloud.

kinect.setLowThresholdPC(minD);

kinect.setHighThresholdPC(maxD);

}

PointCloudDepth example.
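The mapping described in the comment above (0 - 4500 mm to gray 0 - 255, limited by a low and a high threshold in meters) can be sketched per pixel as follows. This is an illustration only: how the library actually treats out-of-range pixels is not stated here, so painting them black is an assumption, and the class DepthThreshold and method toGray are hypothetical names.

```java
// Hypothetical helper (not part of KinectPV2): converts one raw depth
// value in millimeters to a gray level, applying a distance threshold.
final class DepthThreshold {
    static final int MAX_DEPTH_MM = 4500; // upper end of the raw depth range

    /**
     * Returns a gray level in 0..255 for a depth in millimeters.
     * Depths outside [minMeters, maxMeters] are returned as 0 (black),
     * which is an assumption made for this sketch.
     */
    static int toGray(int depthMm, float minMeters, float maxMeters) {
        float meters = depthMm / 1000.0f;
        if (meters < minMeters || meters > maxMeters) {
            return 0;
        }
        int clamped = Math.max(0, Math.min(MAX_DEPTH_MM, depthMm));
        return Math.round(clamped * 255.0f / MAX_DEPTH_MM);
    }
}
```

With the thresholds from the example (minD = 1.0f, maxD = 4.0f), a pixel at 500 mm or at 4200 mm would be black, while pixels in between get brighter with distance.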

Color Point Cloud

Using the same idea as the point cloud vertex buffer, it is possible to render a point cloud in color using information from the RGB frame. The color point cloud example adds one extra float buffer, holding the color frame, and OpenGL combines both buffers, color and vertex. The positions of the point cloud are mapped to the color frame, so each vertex corresponds to an individual pixel of the RGB frame.

//obtain the point cloud positions

FloatBuffer pointCloudBuffer = kinect.getPointCloudColorPos();

//get the color for each point of the cloud Points

FloatBuffer colorBuffer = kinect.getColorChannelBuffer();

pushMatrix(); //matches the popMatrix() at the end

pgl = beginPGL();

sh.bind();

vertLoc = pgl.getAttribLocation(sh.glProgram, "vertex");

colorLoc = pgl.getAttribLocation(sh.glProgram, "color");

pgl.enableVertexAttribArray(vertLoc);

pgl.enableVertexAttribArray(colorLoc);

int vertData = kinect.WIDTHColor * kinect.HEIGHTColor;

pgl.vertexAttribPointer(vertLoc, 3, PGL.FLOAT, false, 0, pointCloudBuffer);

pgl.vertexAttribPointer(colorLoc, 3, PGL.FLOAT, false, 0, colorBuffer);

pgl.drawArrays(PGL.POINTS, 0, vertData);

pgl.disableVertexAttribArray(vertLoc);

pgl.disableVertexAttribArray(colorLoc);

sh.unbind();

endPGL();

popMatrix();

Point Cloud Color example.

Contour extraction with openCV

The KinectPV2 library also includes an example of how to find contours using the OpenCV library by Greg Borenstein.

Initialize both classes, KinectPV2 and OpenCV.

import gab.opencv.*;

import KinectPV2.*;

KinectPV2 kinect;

OpenCV opencv;

void setup() {

size(512*3, 424, P3D);

opencv = new OpenCV(this, 512, 424);

kinect = new KinectPV2(this);

kinect.enableDepthImg(true);

kinect.enableBodyTrackImg(true);

kinect.enablePointCloud(true);

kinect.init();

}

Extract the contours from either the mask image (bodyIndex frame) or the point cloud depth threshold frame. The advantage of the point cloud is that you can set minimum and maximum threshold distances from the Kinect when finding contours, whereas the mask image gives an almost perfect silhouette of the user.

//switch contour extraction between the bodyIndex image and the depth image

if (contourBodyIndex)

image(kinect.getBodyTrackImage(), 512, 0);

else

image(kinect.getPointCloudDepthImage(), 512, 0);

if (contourBodyIndex) {

opencv.loadImage(kinect.getBodyTrackImage());

opencv.gray();

opencv.threshold(threshold);

PImage dst = opencv.getOutput();

} else {

opencv.loadImage(kinect.getPointCloudDepthImage());

opencv.gray();

opencv.threshold(threshold);

PImage dst = opencv.getOutput();

}

ArrayList<Contour> contours = opencv.findContours(false, false);

if (contours.size() > 0) {

for (Contour contour : contours) {

contour.setPolygonApproximationFactor(polygonFactor);

if (contour.numPoints() > 50) {

stroke(0, 200, 200);

beginShape();

for (PVector point : contour.getPolygonApproximation ().getPoints()) {

vertex(point.x + 512*2, point.y);

}

endShape();

}

}

}

Find Contour openCV example.